Multiple Logistic Regression is used to fit a model when the dependent variable is binary and there is more than one independent predictor variable. Before you proceed, I hope you have read our article on Single Variable Logistic Regression.

The other concepts and data preparation steps we have covered so far are: Business Understanding, Data Understanding, Single Variable Logistic Regression, Training & Testing, Information Value & Weight of Evidence, Missing Value Imputation, Outlier Treatment, Visualization & Pattern Detection, and Variable Transformation.

We will now build a multiple logistic regression model on the Development Dataset created in the Development – Validation – Hold Out blog.

Model Development

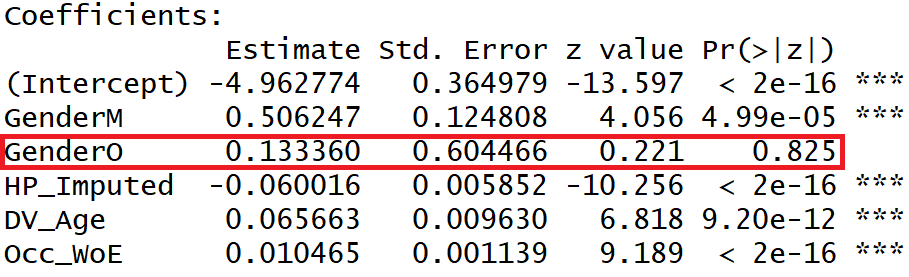

Let us build the Multiple Logistic Regression model with the following independent variables, setting the significance level (alpha) at 0.0001.

- Gender

- HP_Imputed

- DV_Age

- Occ_WoE

Interpretation of Model Summary Output

- We have set the alpha for variable significance at 0.0001. The p-value of the Gender variable is greater than 0.0001, so we fail to reject the Null Hypothesis that its coefficient is zero, i.e. the Gender variable may be considered insignificant and should be dropped.

- The beta coefficients of the independent variables are in line with their correlation trends with the dependent variable.

- The HP_Imputed variable has a negative correlation with the Target phenomenon, hence its beta coefficient is negative.

- The other variables have a positive correlation with the Target, and accordingly their beta coefficients are positive.
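To make the sign interpretation concrete, here is a minimal sketch of how a beta coefficient translates into an odds ratio. The coefficient values are made up for illustration and are not the ones in the model summary; `odds_ratio` is a hypothetical helper name.

```python
import math


def odds_ratio(beta):
    """Multiplicative change in the odds for a one-unit increase in the predictor."""
    return math.exp(beta)


# Hypothetical coefficients, chosen only to show the effect of the sign.
# They are NOT the values from the model summary output.
example_betas = {"negative_beta": -0.5, "zero_beta": 0.0, "positive_beta": 0.5}

for name, beta in example_betas.items():
    ratio = odds_ratio(beta)
    if ratio < 1:
        direction = "lowers"
    elif ratio > 1:
        direction = "raises"
    else:
        direction = "does not change"
    print(f"{name}: beta={beta:+.2f}, odds ratio={ratio:.3f} -> {direction} the odds")
```

A negative beta gives an odds ratio below 1, so the odds of the Target fall as the variable increases; a positive beta gives an odds ratio above 1.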

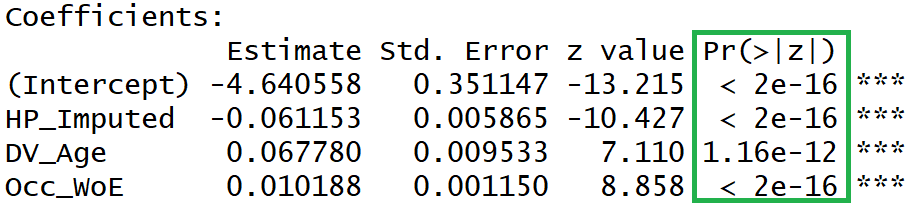

Re-run after dropping Gender Variable

The Gender variable was insignificant in the above model run. As such, we drop the Gender variable and re-run the logistic regression.

All the variables are now significant.
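The drop-and-re-run decision can be sketched as a simple screen of p-values against alpha. The p-values below are hypothetical placeholders rather than the figures from the model output, and `split_by_significance` is an illustrative helper, not part of any library.

```python
ALPHA = 0.0001  # significance level used in this article


def split_by_significance(p_values, alpha=ALPHA):
    """Separate variables into those to keep (p < alpha) and those to drop."""
    keep = [name for name, p in p_values.items() if p < alpha]
    drop = [name for name, p in p_values.items() if p >= alpha]
    return keep, drop


# Hypothetical p-values for illustration only; read the real ones
# from your own model summary.
p_values = {"Gender": 0.02, "HP_Imputed": 1e-12, "DV_Age": 3e-9, "Occ_WoE": 5e-20}

keep, drop = split_by_significance(p_values)
print("keep:", keep)
print("drop:", drop)
```

After dropping a variable, the model should be refitted, because the remaining coefficients and p-values change once a predictor is removed.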

Predict Probabilities

    # predict code in Python
    dev["prob"] = mylogit.predict(dev)

    # predict code in R
    dev$prob <- predict(mylogit, dev, type="response")
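For intuition about what the predict step returns, here is a minimal sketch of the calculation behind a logistic model's probability: the intercept plus the weighted sum of the predictors, passed through the logistic function. The intercept, coefficients, and row values are all assumptions made up for this example, and `predict_probability` is a hypothetical helper.

```python
import math


def predict_probability(intercept, betas, row):
    """Logistic function applied to the linear predictor for one record."""
    z = intercept + sum(betas[name] * row[name] for name in betas)
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical fitted values and a hypothetical record, for illustration only.
intercept = -2.0
betas = {"HP_Imputed": -0.01, "DV_Age": 0.5, "Occ_WoE": 1.0}
row = {"HP_Imputed": 50.0, "DV_Age": 1.0, "Occ_WoE": 0.3}

prob = predict_probability(intercept, betas, row)
print(f"predicted probability: {prob:.4f}")
```

The result always lies between 0 and 1, which is why `type="response"` is passed in the R call: it asks for the probability rather than the linear predictor.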

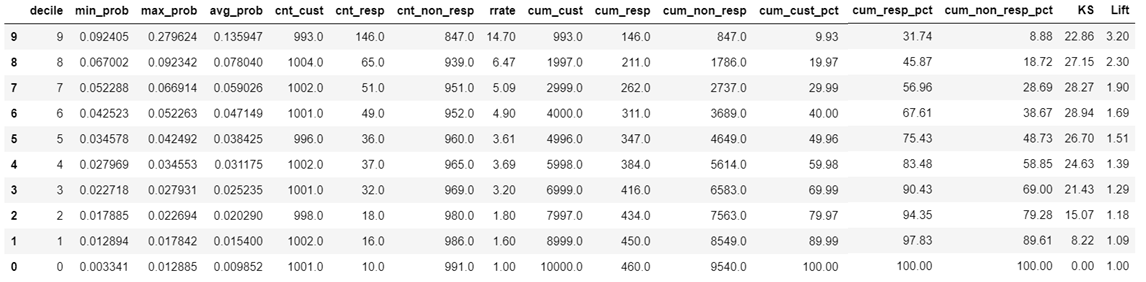

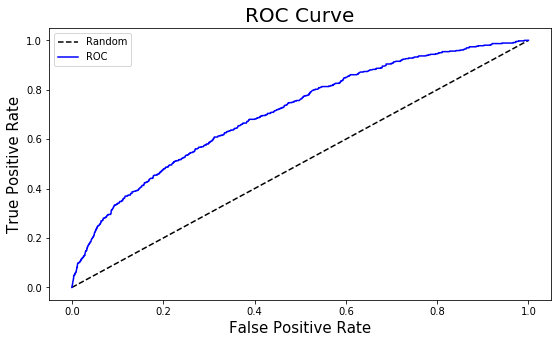

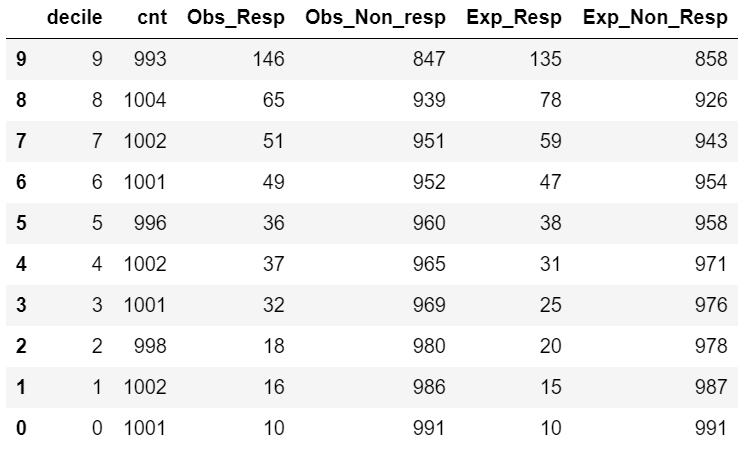

Model Performance Measurement

How good is our model? Is the model usable? Is the model a good fit?

To answer these questions we have to measure the model performance.

(I suggest reading our blog on 7 Important Model Performance Measures before executing the code below.)
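As a rough guide to three of those measures (KS, AUC, and the Gini Coefficient), here is a minimal sketch using their standard textbook definitions and only the Python standard library. The labels and probabilities are toy values, not the development dataset, and the function names are illustrative; the code in the referenced blog may compute them differently.

```python
def auc_score(y_true, y_prob):
    """Chance that a random positive outranks a random negative (ties count half)."""
    pos = [p for y, p in zip(y_true, y_prob) if y == 1]
    neg = [p for y, p in zip(y_true, y_prob) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def ks_statistic(y_true, y_prob):
    """Largest gap between the cumulative score distributions of the two classes."""
    pos_total = sum(y_true)
    neg_total = len(y_true) - pos_total
    best = 0.0
    for threshold in sorted(set(y_prob)):
        pos_cum = sum(1 for y, p in zip(y_true, y_prob) if y == 1 and p <= threshold)
        neg_cum = sum(1 for y, p in zip(y_true, y_prob) if y == 0 and p <= threshold)
        best = max(best, abs(pos_cum / pos_total - neg_cum / neg_total))
    return best


def gini_coefficient(y_true, y_prob):
    """Gini Coefficient derived from AUC."""
    return 2 * auc_score(y_true, y_prob) - 1


# Toy labels and predicted probabilities, for illustration only.
y_true = [0, 0, 1, 0, 1, 1, 0, 1]
y_prob = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]

print("AUC :", auc_score(y_true, y_prob))
print("KS  :", ks_statistic(y_true, y_prob))
print("Gini:", gini_coefficient(y_true, y_prob))
```

These pairwise loops are quadratic in the number of records, which is fine for a small example; on a full dataset a library implementation would be the more practical choice.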

Practice Exercise

- The KS, AUC, and Gini Coefficient of the above model suggest that the model is only fair. It is not a very good model. Improving it is an exercise for the blog reader.

- Remember, in the above regression, we have not used the Balance, No. of Credit Transactions, and SCR variables. Try to bring these variables into the model and improve the overall model performance.

- We used the Occ_WoE variable in the above model; however, we could have used the Occ_Imputed or Occ_KNN_Imputed variables created in the Missing Value Imputation step.
