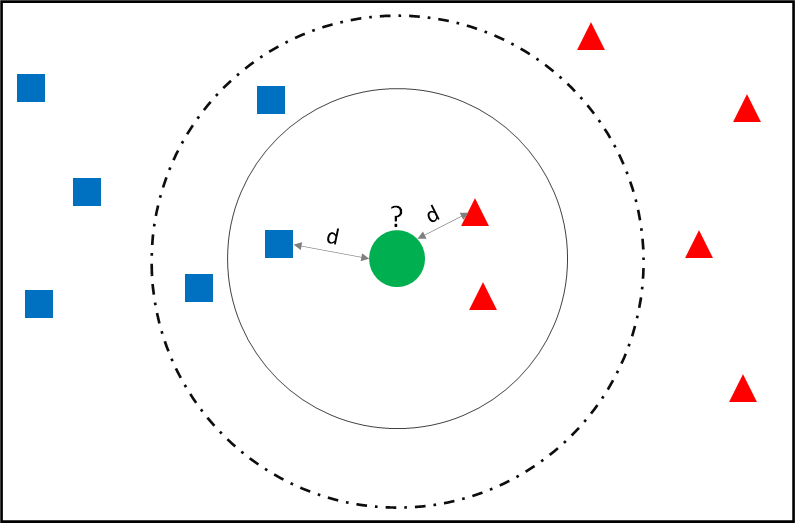

K Nearest Neighbours is the simplest of all the Supervised Machine Learning techniques. The philosophy of the technique is that the class of an unclassified record can be determined from the class of its k adjoining neighbours. Consider the feature image above. What will be the class of the Green Circle?

- If k = 3, then based on three nearest neighbours the Green Dot is more likely to be Red Triangle.

- If k = 5, then based on five nearest neighbours the Green Dot is likely to be Blue Rectangle.

KNN Important Points

- KNN is a Non-Parametric Technique used for Classification & Regression.

- K-NN Classification is done based on the majority vote of its neighbours

- K-NN Regression, the output value is the average of the value of its k nearest neighbours

- The neighbours in KNN can be given Weights. A common scheme is to give each neighbour a weight of 1/d, where d is the distance to the neighbour.

- K-NN is Lazy Learning Technique and simplest of all Machine Learning Techniques

KNN is a Lazy Learning Technique

A supervised machine learning model is built by fitting a model between the dependent and independent variable. However, KNN does not fit any model between the dependent variable (Y) and the independent variables (X) at the time of model development. It simply stores all the records being passed to it at the learning stage, as such, it is called a lazy learning technique.

At the time of prediction, the distance of the new record from all the records in the development dataset is calculated. Based on distance, the k nearest neighbours are selected for voting. This generic KNN algorithm is computationally intensive at the time of prediction. This approach is also known as the brute-force approach.

There are two optimization algorithms in KNN

- KD Tree

- Ball Tree

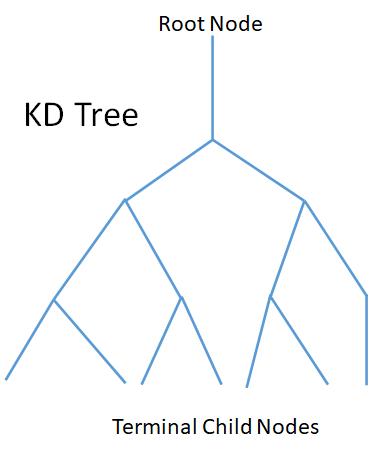

KD Tree – K Dimensional Tree

The KD Tree algorithm is designed to speed up the KNN. Assume you have predictors variables A, B, C, and D. The KD tree computes the variance of all the variables and splits the data at median based on the variable having the highest variance. Say the variable C is first considered. The starting node (root node) is split into two child nodes.

Each of the child nodes is further split by the variable with the next highest variance. The child nodes are split into two halves. This process is repeated until the number of records in child nodes is more than a set threshold limit. Note – The threshold value has to be set more than the K value.

KD Tree – Predict

The KD Tree optimizes the prediction of new unclassified observation. Based on the attribute values of the new record, it will navigate from the root node of the KD Tree to the last level child node. The distance of the new record is computed from the observations in the child node only and the classification is done based on the K Nearest Neighbours in that child.

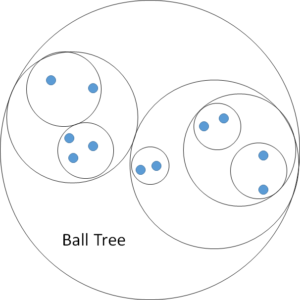

Ball Tree

This is another algorithm to speed up the KNN calculations for prediction on unclassified records. The overall data is split into two almost equal-sized clusters (each resembling a ball). Each ball is further split into two clusters (balls). This process is repeated until the number of records in each resulting split cluster is more than a set threshold limit. Note – The threshold value has to be set more than the K value.

Ball Tree – Predict

The Ball Tree optimizes the prediction of new unclassified observation using Ball Tree search. The ball tree search starts by examining the clusters in depth-first order, i.e. starting at the root. The cluster centroid which is closest to the new record is considered and the others discarded. Based on the search, the new record will eventually end up being considered as closest to only of the terminal clusters. The distance of the unclassified record is then computed from all the observations in the cluster and the classification is done based on the K Nearest Neighbours in that cluster.

Applications of KNN Technique

- KNN technique can be used for Classification and Regression

- KNN is extensively used in Image Processing and Image Classification

- Another major application of KNN is in missing value imputation

Thank you

Recent Comments